How We Revolutionized Online Exam Proctoring with the YOLO Model: Detecting Head Movements, Voices, and More

Introduction

As online education continues to grow, ensuring the integrity of remote examinations has become a significant challenge. At Miru Technologies, we recognized this need early on and have developed an advanced online exam proctoring system powered by the YOLO (You Only Look Once) model. In this article, we’ll walk you through how we implemented this cutting-edge technology to detect head movements, unauthorized voices, and other potential signs of cheating, ensuring a fair and secure exam environment for all.

Our Approach: Leveraging YOLO for Real-Time Proctoring

At Miru Technologies, we chose YOLO for its speed and accuracy in real-time object detection. Because YOLO processes the full image in a single forward pass, rather than scanning many candidate regions one by one, it was a natural fit for a responsive and reliable proctoring system. Here's how we integrated YOLO into our proctoring solution to address key challenges in online exams.
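To make "a single pass" concrete: in its default configuration, YOLOv5 predicts boxes on three output grids (strides 8, 16 and 32) with three anchor boxes per grid cell, all in one forward pass. The sketch below only counts those raw candidate boxes for a given input size; it does not run a model, and the helper name is ours.

```python
def yolov5_candidate_boxes(img_size=640, strides=(8, 16, 32), anchors_per_cell=3):
    """Count the raw box predictions a default YOLOv5 head emits in one forward pass."""
    total = 0
    for stride in strides:
        cells = (img_size // stride) ** 2  # e.g. an 80x80 grid at stride 8 for a 640px input
        total += cells * anchors_per_cell
    return total


print(yolov5_candidate_boxes())  # 25200
```

Non-maximum suppression then reduces these candidates to the handful of final detections drawn on each frame.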

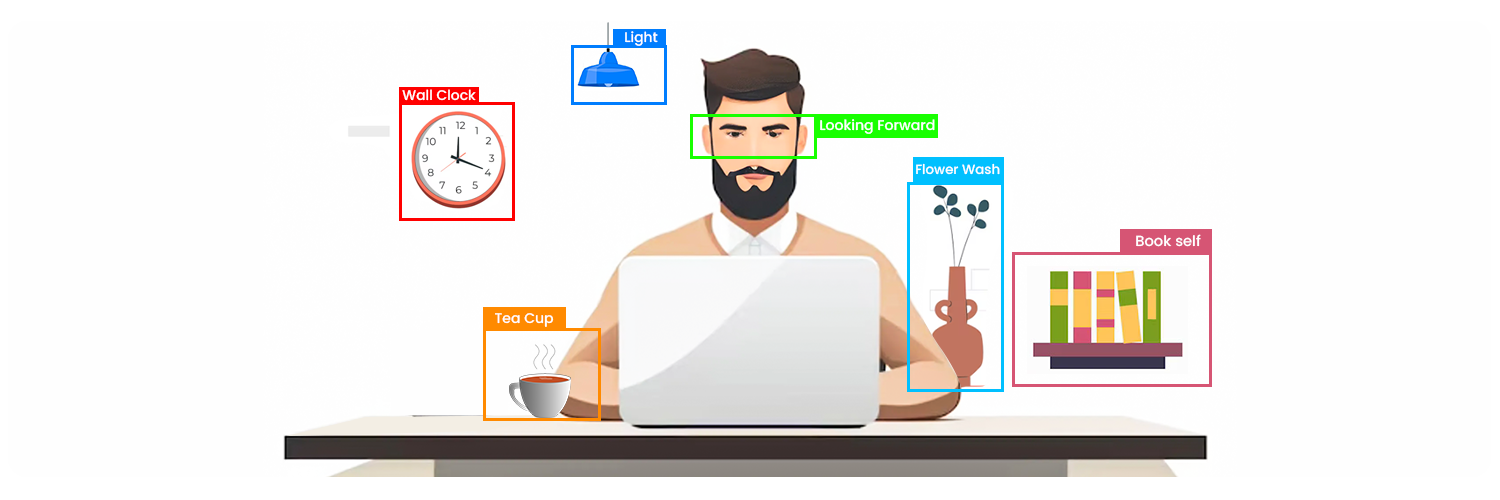

1. Detecting Suspicious Head Movements

One of the most telling signs of cheating during an online exam is unnatural head movement, such as frequently looking away from the screen. We used the YOLO model to monitor and analyze head movements in real time.

Sample Code for Head Movement Detection

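```python
import cv2
import yolov5

# Load a YOLOv5 model. NOTE: the stock COCO weights (yolov5s.pt) have no "head"
# class (only "person"); substitute weights fine-tuned with a "head" label.
model = yolov5.load("yolov5s.pt", device="cpu")

# Function to detect and mark head positions in a single frame
def detect_head_movements(frame):
    # OpenCV frames are BGR; YOLOv5 expects RGB numpy arrays
    results = model(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
    # One row per box: xmin, ymin, xmax, ymax, confidence, class, name
    detections = results.pandas().xyxy[0]
    for _, det in detections.iterrows():
        if det["name"] == "head":
            x1, y1, x2, y2 = (int(det[k]) for k in ("xmin", "ymin", "xmax", "ymax"))
            cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
            # Further analysis of head position can be done here
    return frame

# Capture video from the default webcam
cap = cv2.VideoCapture(0)

while True:
    ret, frame = cap.read()
    if not ret:  # camera disconnected or no frame available
        break
    frame = detect_head_movements(frame)
    cv2.imshow("Proctoring - Head Movement Detection", frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```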

- Implementation: By training YOLO to detect head positions and tracking them across frames, we established a baseline for normal head movements during an exam. Our system can differentiate between typical behaviors, like slight shifts, and suspicious activities, such as repeatedly glancing to the side or away from the screen.

- Real-Time Alerts: When our system detects head movements that deviate from the norm, it instantly triggers an alert. This allows human proctors to intervene if necessary or flag the incident for further review.
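One simple way to turn per-frame head boxes into the baseline-and-deviation logic described above is a z-score check against a short calibration window. This is a hypothetical sketch, not our production logic: `HeadMovementMonitor`, its default thresholds, and the choice to treat a missing head as "looking away" are illustrative assumptions. It expects the centre of the head box for each frame, for example computed from `xmin`/`xmax`/`ymin`/`ymax` in the detection code.

```python
from statistics import mean, pstdev


class HeadMovementMonitor:
    """Hypothetical helper: flags sustained deviation of the head position from a baseline."""

    def __init__(self, calibration_frames=30, z_threshold=3.0, min_consecutive=15):
        self.calibration_frames = calibration_frames  # frames used to learn the "normal" position
        self.z_threshold = z_threshold                # deviation (in std devs) counted as unusual
        self.min_consecutive = min_consecutive        # unusual frames in a row before alerting
        self._xs, self._ys = [], []
        self.baseline = None  # (mean_x, mean_y, std_x, std_y) once calibrated
        self._streak = 0

    def update(self, center):
        """Feed the head-box centre (x, y) for one frame, or None if no head was found.

        Returns True exactly once per episode, on the frame where the streak of
        unusual frames reaches `min_consecutive`.
        """
        if self.baseline is None:
            if center is not None:
                self._xs.append(center[0])
                self._ys.append(center[1])
                if len(self._xs) == self.calibration_frames:
                    # Floor the spread at 1 px so a perfectly still calibration
                    # does not cause division by zero.
                    self.baseline = (mean(self._xs), mean(self._ys),
                                     max(pstdev(self._xs), 1.0), max(pstdev(self._ys), 1.0))
            return False

        if center is None:
            deviated = True  # no head in view: treat like looking away
        else:
            mx, my, sx, sy = self.baseline
            z = max(abs(center[0] - mx) / sx, abs(center[1] - my) / sy)
            deviated = z > self.z_threshold
        self._streak = self._streak + 1 if deviated else 0
        return self._streak == self.min_consecutive


# Usage: five calibration frames, one normal frame, then the head moves far to the side.
monitor = HeadMovementMonitor(calibration_frames=5, min_consecutive=3)
centres = [(320, 240), (322, 241), (318, 239), (321, 240), (319, 240),
           (320, 240), (450, 240), (452, 241), (455, 240)]
alerts = [monitor.update(c) for c in centres]
print(alerts.index(True))  # 8
```

Requiring several consecutive unusual frames is what separates a brief stretch from a sustained glance away; the right values depend on frame rate and would need tuning on real sessions.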

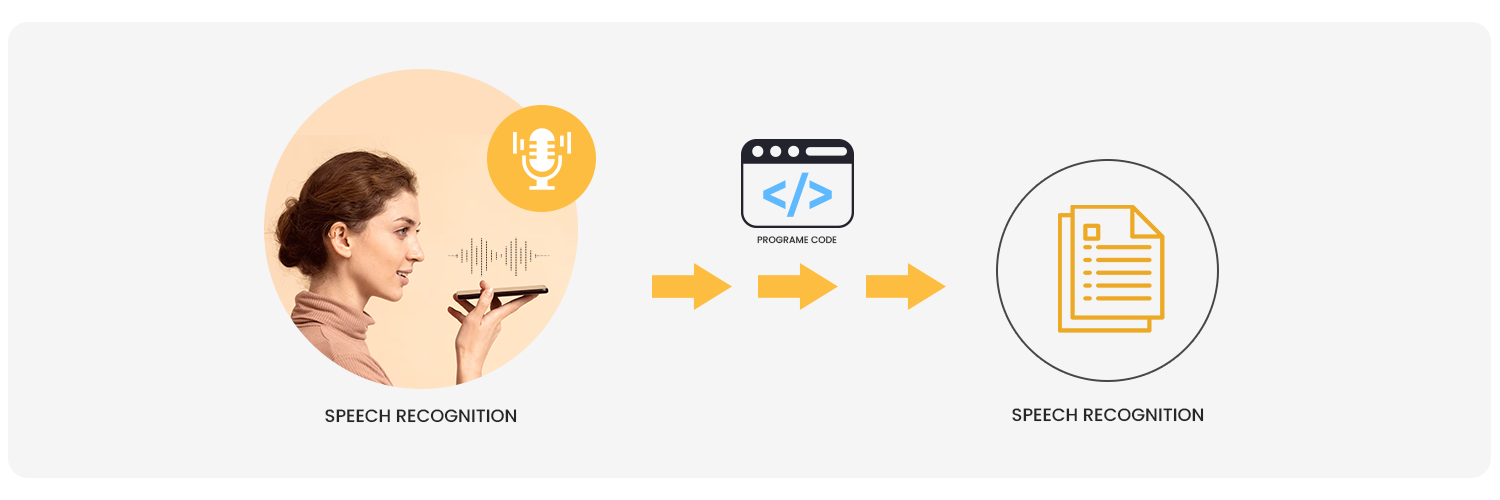

2. Monitoring Audio to Detect Unauthorized Voices

Collaboration or outside assistance during an online exam is a common concern, so detecting unauthorized voices is crucial. Because YOLO analyzes only video, our solution runs a dedicated audio-processing pipeline alongside it to provide comprehensive monitoring.

Sample Code for Audio Monitoring and Voice Detection

```python
import speech_recognition as sr

# Initialize recognizer
recognizer = sr.Recognizer()

# Function to transcribe recorded audio and flag suspicious speech
def detect_voices(audio_file):
    with sr.AudioFile(audio_file) as source:
        audio = recognizer.record(source)  # read the whole file into memory
    try:
        # Transcribe the audio with Google's Web Speech API
        transcript = recognizer.recognize_google(audio)
        # Placeholder keyword check: a transcript reveals *what* was said,
        # not *who* said it, so speaker identification needs a separate step.
        if "unauthorized phrase" in transcript.lower():
            print("Alert: Unauthorized voice detected!")
    except sr.UnknownValueError:
        print("Could not understand the audio")
    except sr.RequestError as e:
        print(f"Could not request results; {e}")

# Example usage (AudioFile accepts WAV, AIFF and FLAC files)
detect_voices("exam_audio.wav")
```

- Audio Processing Integration: Our audio algorithms run alongside the YOLO video pipeline and distinguish the examinee's voice from any additional voices in the room. By recording a voice print at the beginning of the exam, our system can identify and flag speech that does not match it.

- Real-Time Voice Detection: If our system detects an additional voice, it immediately notifies the proctor, who can then take appropriate action. This feature is vital in preventing unauthorized collaboration during exams.
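The voice-print comparison described above can be sketched as a cosine-similarity check between speaker embeddings. This is a minimal illustration under stated assumptions, not our production method: it assumes each speech segment has already been converted into a fixed-length embedding vector by some speaker-embedding model (not shown), and the `0.7` threshold and both helper names are hypothetical placeholders that would need tuning on real recordings.

```python
import numpy as np


def build_voice_print(enrollment_embeddings):
    """Average the L2-normalised embeddings recorded while the examinee enrolls."""
    emb = np.asarray(enrollment_embeddings, dtype=float)
    emb = emb / np.linalg.norm(emb, axis=1, keepdims=True)  # new array; caller's data untouched
    centroid = emb.mean(axis=0)
    return centroid / np.linalg.norm(centroid)


def is_unknown_speaker(segment_embedding, voice_print, threshold=0.7):
    """True if a speech segment's cosine similarity to the voice print is below `threshold`."""
    seg = np.asarray(segment_embedding, dtype=float)
    similarity = float(seg @ voice_print) / float(np.linalg.norm(seg))
    return similarity < threshold


# Usage with toy 3-dimensional vectors (real speaker embeddings are much longer).
examinee = build_voice_print([[0.9, 0.1, 0.0], [1.0, 0.0, 0.1]])
print(is_unknown_speaker([0.95, 0.05, 0.05], examinee))  # False
print(is_unknown_speaker([0.0, 1.0, 0.2], examinee))     # True
```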

3. Identifying Suspicious On-Screen Activity

In addition to monitoring head movements and audio, our system recognizes objects and activities within the webcam's field of view, helping ensure that no unauthorized materials or devices are being used.

Sample Code for Detecting Suspicious Objects

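```python
# Continuation of the YOLO implementation above (reuses `model` and `cv2`)
def detect_suspicious_objects(frame):
    # OpenCV frames are BGR; YOLOv5 expects RGB numpy arrays
    results = model(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
    detections = results.pandas().xyxy[0]
    # "cell phone" and "book" are COCO classes; "notepad" requires custom training
    for _, det in detections.iterrows():
        if det["name"] in ("cell phone", "book", "notepad"):
            x1, y1, x2, y2 = (int(det[k]) for k in ("xmin", "ymin", "xmax", "ymax"))
            cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 0, 255), 2)
            print(f"Alert: Suspicious object detected ({det['name']})!")
    return frame
```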

- Object Detection: We've trained YOLO to identify common cheating tools, such as smartphones, notes, or additional screens. Our system scans the environment continuously and alerts proctors when it detects a suspicious item.
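Continuous scanning means a single misdetected frame could raise an alert. A common mitigation, shown here as a hypothetical sketch rather than our production logic, is to report an object only when its label persists across several recent frames. The `(label, confidence)` pairs could come from the `name` and `confidence` columns of YOLOv5's `results.pandas().xyxy[0]`; `PersistenceFilter` and its default values are illustrative assumptions.

```python
from collections import Counter, deque


class PersistenceFilter:
    """Hypothetical helper: report a label only if it is seen in >= k of the last n frames."""

    def __init__(self, k=5, n=10, min_confidence=0.5):
        self.k = k
        self.min_confidence = min_confidence
        self.history = deque(maxlen=n)  # one set of labels per frame; oldest dropped automatically

    def update(self, detections):
        """`detections`: list of (label, confidence) pairs for one frame.

        Returns the set of labels that currently satisfy the persistence rule.
        """
        labels = {label for label, conf in detections if conf >= self.min_confidence}
        self.history.append(labels)
        counts = Counter(label for frame_labels in self.history for label in frame_labels)
        return {label for label, count in counts.items() if count >= self.k}


# Usage: a phone seen in 3 of 5 frames passes; a low-confidence "book" is ignored.
f = PersistenceFilter(k=3, n=5)
frames = [[("cell phone", 0.8)], [], [("cell phone", 0.9)], [("book", 0.3)], [("cell phone", 0.7)]]
for dets in frames:
    alerts = f.update(dets)
print(alerts)  # {'cell phone'}
```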

Balancing Innovation with Privacy

While developing this powerful proctoring system, we've been mindful of the importance of privacy and fairness:

- Data Security: We ensure that all data collected during exams is encrypted and securely stored. Only authorized personnel can access this data, and it is used solely for its intended purpose.

- Informed Consent: Transparency is key. We make sure that all examinees are fully informed about the monitoring process and give their consent before the exam begins.

Minimizing False Positives

A significant focus of our development process was reducing false positives: instances where innocent behavior is incorrectly flagged as suspicious.

- Robust Training Data: We used extensive and diverse datasets to train our YOLO model, ensuring that it can accurately differentiate between normal and suspicious behaviors across various scenarios.

- Human Oversight: To further ensure accuracy, our automated system is supported by human proctors who review any flagged incidents to determine whether they require further action.
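To keep human review manageable, individual flags can be grouped into incidents before they reach a proctor, so a reviewer sees one entry per episode instead of one per frame. The helper below is a hypothetical sketch: it assumes flags are timestamps in seconds from the start of the exam, and `max_gap` (how far apart two flags may be and still belong to the same incident) is an illustrative value.

```python
def group_flags_into_incidents(flag_times, max_gap=5.0):
    """Merge flag timestamps (seconds) into (start, end, flag_count) incidents."""
    incidents = []
    for t in sorted(flag_times):
        if incidents and t - incidents[-1][1] <= max_gap:
            # Close enough to the previous flag: extend the current incident
            start, _, count = incidents[-1]
            incidents[-1] = (start, t, count + 1)
        else:
            # Gap too large (or first flag): open a new incident
            incidents.append((t, t, 1))
    return incidents


flags = [12.0, 13.5, 14.0, 60.2, 61.0, 300.0]
print(group_flags_into_incidents(flags))
# [(12.0, 14.0, 3), (60.2, 61.0, 2), (300.0, 300.0, 1)]
```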

Conclusion

At Miru Technologies, we’ve successfully harnessed the power of the YOLO model to create a state-of-the-art online exam proctoring solution. Our system not only detects head movements, unauthorized voices, and suspicious on-screen activity but also respects the privacy and integrity of each examinee. This innovative approach ensures that online exams are conducted in a fair, secure, and efficient manner, setting a new standard in the field of remote education.